Other articles:

robots.txt for http://www.wikipedia.org/ and friends # # Please note: There are a lot of pages on this site, and there are # some misbehaved spiders out .

Robots.txt for http://www.dailymail.co.uk/ # All robots will spider the domain # Begin standard rules # Meltwater block User-agent: Meltwater Disallow: .

User-agent: * Crawl-delay: 10 Sitemap: http://www.whitehouse.gov/feed/media/video-audio.
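As a rough illustration of how a crawler might read a `Crawl-delay` value like the one in the snippet above, here is a minimal sketch using Python's standard `urllib.robotparser` module. The bot name `ExampleBot` and the helper `crawl_delay_for` are hypothetical, and the rules are a cut-down version of the snippet, not the real file.

```python
from urllib.robotparser import RobotFileParser

# Cut-down rules modeled on the snippet above (not the site's real file).
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
"""

def crawl_delay_for(robots_txt, useragent):
    """Return the Crawl-delay (in seconds) that applies to useragent, or None."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(useragent)

# "ExampleBot" is a hypothetical crawler name; it falls under the "*" group.
delay = crawl_delay_for(ROBOTS_TXT, "ExampleBot")
print(delay)
```

A polite crawler would wait that many seconds between requests. Not every crawler honors the directive.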

User-Agent: * Disallow: /music? Disallow: /widgets/radio? Disallow: /show_ads.php Disallow: /affiliate/ Disallow: /affiliate_redirect.php Disallow: .

To remove your site from the Wayback Machine, place a robots.txt file at the top level of . The robots.txt file must be placed at the root of your domain .

Jump to specifying the location in your site's robots.txt file: You can specify the location of the Sitemap using a robots.txt file. .
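A minimal sketch of how the `Sitemap` line can be read back out of a robots.txt file, assuming Python 3.8 or later (where `RobotFileParser.site_maps()` is available). The `example.com` URLs and the helper name `sitemaps_in` are placeholders for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that advertises one sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

Sitemap: https://example.com/sitemap.xml
"""

def sitemaps_in(robots_txt):
    """Return the list of Sitemap URLs declared in the robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []

sitemaps = sitemaps_in(ROBOTS_TXT)
print(sitemaps)
```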

Also, large robots.txt files handling tons of bots are fault prone. It's easy to fuck up a complete robots.txt with a simple syntax error in one user agent .

# $Id: robots.txt,v 1.45 2010/12/08 21:56:35 scottrad Exp $ # # This is a file retrieved . See <URL:http://www.robotstxt.org/wc/exclusion.html#robotstxt> .

Brett Tabke experiments with writing a weblog in a text file usually read only by robots. Commentary on the world of search engine marketing.

Check the syntax of your robots.txt file for proper site indexing.

# Robots.txt file for http://www.microsoft.com # User-agent: * Disallow: /*TOCLinksForCrawlers* Disallow: /*/mac/help.mspx Disallow: /*/mac/help.mspx? .

Hundreds of web robots crawl the Internet and build search engine databases, but they generally follow the instructions in a site's robots.txt. .

The robots text file, what is it? Information on the robots exclusion protocol and how to develop a properly validated robots.txt file.

May 7, 2011 . Robots.txt files (often erroneously called robot.txt, in singular) are created by webmasters to mark (disallow) files and directories of a .

Feb 10, 2011 . Cleaning up my files during the recent redesign, I realized that several years had somehow passed since the last time I even looked at the .

robots.txt file for YouTube # Created in the distant future (the year 2000) after # the robotic uprising of the mid 90's which wiped out all humans. .

Aug 23, 2010 . Web site owners use the /robots.txt file to give .

Information on robots.txt and how it affects your website. Also includes a free robots.txt generator.

Aug 23, 2010 . This file must be accessible via HTTP on the local URL .

Learn about robots.txt and how it can be used to control what search engines and crawlers do on your site.

Online tool for syntax verification of robots.txt files, provided by Simon Wilkinson.

If you care about validation, this robots.txt validator will check your robots.txt file for syntax errors.

Robots.txt - Find out why you may need to create a robots.txt file in order to prevent your site from being penalized for spamming by the search engines.

The robots exclusion protocol (REP), or robots.txt, is a text file webmasters create to instruct robots (typically search engine robots) on how to crawl .

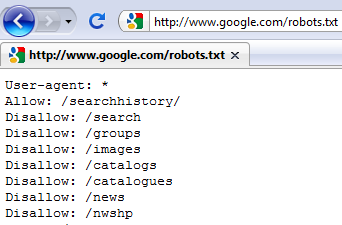

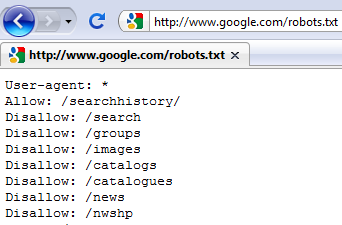

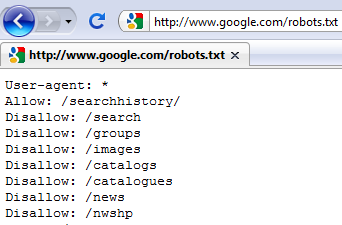

User-agent: * Disallow: /search Disallow: /groups Disallow: /images Disallow: /catalogs Disallow: /catalogues Disallow: /news Allow: /news/directory .

The quick way to prevent robots visiting your site is to put these two lines into the /robots.txt file on your server: User-agent: * Disallow: / .
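The effect of those two lines can be checked with a short sketch built on Python's standard `urllib.robotparser`. The bot name `AnyBot` and the `example.com` URL are placeholders, and the result only reflects what a compliant robot would do.

```python
from urllib.robotparser import RobotFileParser

# The two-line "keep everyone out" file quoted above.
BLOCK_ALL = [
    "User-agent: *",
    "Disallow: /",
]

parser = RobotFileParser()
parser.parse(BLOCK_ALL)

# "AnyBot" is a hypothetical crawler name; every path on the site is off limits.
blocked = not parser.can_fetch("AnyBot", "http://example.com/index.html")
print(blocked)
```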

Jump to The robots.txt file: You can customize the robots.txt file to apply only to specific robots, and to disallow access to specific .

robots.txt generator designed by an SEO for public use. Includes tutorial.

Robots.txt. It is great when search engines frequently visit your site and index your content but often there are cases when indexing parts of your online .

Enter the name of your website and click "Download robots.txt from site". To find out if our robot will visit the pages given in the "URL list" window, .

User-agent: * Disallow: /*?action=print Disallow: */print* Disallow: */xmlrpc.
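Rules like these rely on `*` wildcards. The original convention matches rules as plain path prefixes, and wildcard support is an extension that only some crawlers implement. The sketch below shows one plausible way a crawler could interpret such patterns, assuming `*` matches any run of characters and the rule must match from the start of the path. The function names and the sample paths are hypothetical.

```python
import re

def rule_to_regex(pattern):
    """Translate a robots.txt path pattern with '*' wildcards into a regex."""
    # Escape each literal piece, then let '*' stand for "any characters".
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body)

def rule_matches(pattern, path):
    """True if the pattern matches the path, anchored at the start of the path."""
    return rule_to_regex(pattern).match(path) is not None

print(rule_matches("/*?action=print", "/wiki/Page?action=print"))
print(rule_matches("/*?action=print", "/wiki/Page"))
print(rule_matches("*/print*", "/docs/print/page1"))
```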

#Google Search Engine Robot User-agent: Googlebot # Crawl-delay: 10 -- Googlebot ignores crawl-delay ftl Allow: /*?*_escaped_fragment_ Disallow: /*? .

Aug 23, 2010 . Information on the robots.txt Robots Exclusion Standard and other articles about writing well-behaved Web robots.

Apr 26, 2011 . robots.txt files are part of the Robots Exclusion Standard. They tell web robots how to index a site. A robots.txt file must be placed in .

Jump to Robots.txt Optimization: Search Engines read a yourserver.com/robots.txt file to get information on what they should and shouldn't be .

# Disallow all crawlers access to certain pages. User-agent: * Disallow: /exec/obidos/account-access-login Disallow: /exec/obidos/change-style Disallow: .

can_fetch(useragent, url): Returns True if the useragent is allowed to fetch the url according to the rules contained in the parsed robots.txt file. .
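A short usage sketch of `can_fetch`, assuming the method quoted above is the one on `urllib.robotparser.RobotFileParser` in Python's standard library. The rules, the bot name `ExampleBot`, and the URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: everything is open except /search and /groups.
RULES = """\
User-agent: *
Disallow: /search
Disallow: /groups
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

allowed_home = parser.can_fetch("ExampleBot", "http://example.com/index.html")
allowed_search = parser.can_fetch("ExampleBot", "http://example.com/search?q=robots")
print(allowed_home, allowed_search)
```

In real use the parser would usually be pointed at a live file with `set_url()` and `read()` instead of `parse()`. The sketch avoids network access so it can run anywhere.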

May 10, 2011 . ROBOTS.TXT is a stupid, silly idea in the modern era. Archive Team entirely ignores it and with precisely one exception, everyone else .

When robots (like the Googlebot) crawl your site, they begin by requesting http://example.com/robots.txt and checking it for special instructions.
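Because the file always lives at the root of the host, a crawler can derive its location from any page URL. A minimal sketch, where `robots_url` is a hypothetical helper name:

```python
from urllib.parse import urlsplit, urlunsplit

def robots_url(page_url):
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host; replace path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_url("http://example.com/news/2011/story.html?page=2"))
```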

Mar 20, 2011 . A robots.txt file restricts access to your site by search engine robots that crawl the web. These bots are automated, and before they access .

# Notice: if you would like to crawl Facebook you can # contact us here: http://www.facebook.com/apps/site_scraping_tos.php # to apply for whitelisting. .

Jan 27, 2011 . # robots.txt for http://arxiv.org/ and mirror sites http://*.arxiv.org/ # Indiscriminate automated downloads from this site are not .

Sitemap: http://www.cnn.com/sitemap_index.xml Sitemap: http://www.cnn.com/sitemap_news.xml Sitemap: http://www.cnn.com/video_sitemap_index.xml Sitemap: .

Generate effective robots.txt files that help ensure Google and other search engines are crawling and indexing your site properly.

User-agent: * Allow: /ads/public/ Disallow: /ads/ Disallow: /adx/bin/ Disallow: /aponline/ Disallow: /archives/ Disallow: /auth/ Disallow: /cnet/ Disallow: .

Mar 11, 2006 . Use this module when you are running multiple Drupal sites from a single code base (multisite) and you need a different robots.txt file for .

Sep 19, 2008 . The robots.txt file is divided into sections by the robot crawler's User Agent name. Each section includes the name of the user agent .
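The section structure described above can be sketched as a small parser that groups rules under the user agent they belong to. This is a simplified illustration, not a complete implementation: `split_sections` is a hypothetical helper, only `Allow`, `Disallow`, and `Crawl-delay` lines are collected, and `ExampleBot` is a made-up crawler name.

```python
def split_sections(robots_txt):
    """Group robots.txt rules by the User-agent name they apply to."""
    sections = {}
    current_agents = []
    seen_rule = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments and whitespace
        if not line or ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if seen_rule:                     # a new section starts here
                current_agents = []
                seen_rule = False
            current_agents.append(value)
            sections.setdefault(value, [])
        elif current_agents and field in ("allow", "disallow", "crawl-delay"):
            seen_rule = True
            for agent in current_agents:
                sections[agent].append((field, value))
    return sections

SAMPLE = """\
# Hypothetical file with two sections
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /search
Allow: /news/directory
"""

sections = split_sections(SAMPLE)
print(sections)
```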

##ACAP version=1.0 #Robots.txt File #Version: 0.8 #Last updated: 04/01/2010 # Site contents Copyright Times Newspapers Ltd #Please note our terms and .

Increase your ranking with a proper robots.txt file.

A robots.txt file on a website will function as a request that specified robots ignore specified files or directories in their search. .
